Human–Computer Interaction (HCI)


The HCI group conducts several projects in human–computer interaction, with the aim of making people’s lives more convenient and fulfilling. Our current research focuses on two areas: VR meta-work through remote robot operation, and the Internet of Realities for remote communication.

VR Meta-Work via Remote Robot Operation

We are working toward a “meta-work society” in which people can work remotely from anywhere, including their own homes, simply by wearing a VR headset. We have developed a remote work system that integrates stereo video with VR and relies on precise robot arm control. Our current goals are to further reduce the operator’s workload, optimize robot arm control, and design optimal VR user interfaces. We hope this technology will help address social issues in Japan, such as labor shortages in the manufacturing industry and employment disparities between urban and rural areas.

Internet of Realities

We are developing MetaPo, a unified approach for connecting distributed physical spaces with cyberspace. MetaPo presents 360-degree video and audio on spherical displays and, combined with RHS360, which integrates telerobotics technologies, enables physical interaction and non-verbal communication across separate spaces.

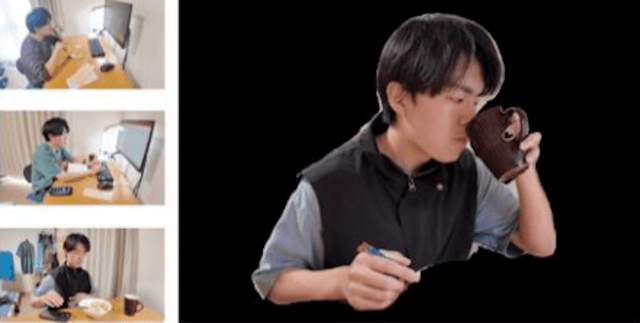

Remote communication often lacks the objects around the people involved. To address this, we have also developed Actstream, a system that dynamically identifies objects associated with each user and shares them with communication partners, enhancing remote communication while preserving user privacy.